2.3. Suite-modelling, PlanetEngine and Everest

A marked advantage of Underworld over other codes is the exposure of the Python-level API, transforming a geodynamic numerical modelling code into a fully-featured platform for geodynamics-related application development. One product of this has been the UWGeodynamics application, which thoroughly streamlines the design and construction of fully-dimensionalised lithospheric-scale models for precise and accurate recreation of real-world scenarios [Beucher et al., 2019], taking the already impressive ease-of-use of the standard Underworld interface and refining it still further for a particular use case.

Just as the particular challenges of lithospheric-scale modelling demanded and informed a bespoke tool, so have we found it necessary to invest in a unique software platform to support our idiosyncratic use case. The product has been two new pieces of research software, both open-source and freely available for the community to use, collaborate on, and iterate: PlanetEngine, an Underworld application for whole-mantle modelling in the annulus; and Everest, an HDF5-based data format and associated tools for numerical exploration across potentially any field of science.

Here we will discuss the particular dimensions of the research problem at hand and describe the peculiar methodological framework and vocabulary we have developed in response. We will then provide an overview of the architectural solutions we have ventured and discuss some of the higher-level ‘big data’ methods which the new format now supports.

2.3.1. A conceptual meta-model for suite-modelling

What kind of thing is a planet? This, broadly stated, is the parent question from which all other research questions presented in this thesis derive. Though our immediate contribution must necessarily be limited and contingent, the methodologies we advance here reflect a genuine attempt to formulate a tractable framework that could eventually embrace a solution to this thorniest of planetary problems.

For this thesis, the term ‘suite-modelling’ is defined so as to encompass any modelling enterprise in which the primary research output derives from an analysis of the divergent behaviours of a series of models, rather than the particular outcomes of each. A model series of forty or four hundred or four thousand particular strike-slip zones done with the sole intent of, say, building a catalogue or database of tectonic scenarios would not necessarily qualify as a suite-modelling exercise according to this rubric. Conversely, a survey of only four strike-slip zones might qualify if the purpose was to attain a new general inference about strike-slip zones which emerges from and transcends the behaviour of any individual case. Suite-modelling in this sense is an outgrowth of, and shares common methods and objectives with, the sorts of first-order analytical approaches discussed earlier in this chapter: it is the pursuit of analytical truth by empirical means.

To understand the scope of this challenge, it is instructive to consider perhaps the simplest possible example of a thermal convection model: a box with two insulated vertical walls and two horizontal walls of fixed temperature - one cooler, one warmer - containing some incompressible fluid whose nature we wish to scrutinise.

Let us suppose that the insulated walls of our box are such that the temperature very rapidly equilibrates across each of the two walls, but only gradually equilibrates with the fluid, so that the wall temperature at any point is the spatiotemporal average (over certain wavelengths) of the fluid temperature adjacent to the wall. For this system, the only variables that we can control are the absolute temperatures of the two thermal walls, or equivalently the gradient between them and a reference temperature, while the only variables we may observe are the temperatures of the two insulating walls.

Such an apparatus is the conceptual equivalent of a parameterised two-dimensional thermal convection model. From first principles, it can be seen to comprise:

A description or intention, which for this thought experiment is essentially just the previous paragraph. This we will term the ‘schema’ after Kant [Kant, 1781]. There are infinitely many conceivable schemas which relate to each other through uncountably many dimensions, collectively defining a kind of ‘schematic space’ of which any particular schema is a vector.

The implementation, which involves a number of decisions which imperfectly reify the system into a usable form. These decisions have a first-order influence on the behaviour of the system - however, they are not properly a part of the system as such, and whatever influence they do have that does not directly serve the systemic intention is a limitation or shortcoming of the model which must be accounted for. The implementation is made up of some amount of irreducible experimental capital, like the box itself and the room it is held in, as well as some number of variable quantities, such as the thicknesses of the walls. The former we term the model ‘capital’, the latter the model ‘options’. Ideally, both the capital and the options of implementation disappear into the background, as when a sufficiently high-resolution model becomes indistinguishable from the equivalent analytical treatment. In practice, there is always a bleeding-over from implementation to conceptualisation; consequently, though the implementation details should ideally not enter into the final analytical formulations, they remain inseparable from those outcomes and - for the sake of completeness and reproducibility - must always travel with them as metadata.

It has two ‘system variables’: the temperatures of the opposing thermal walls. These variables can be assigned any of infinitely many values and must ultimately emerge in the final treatment as terms of any complete theorem for the model behaviour. Let us call these the ‘parameters’ of the model. The parameters are the degrees of freedom of the schema, so that the schema itself can be visualised as an infinite Cartesian plane with the parameters as axes: the ‘parameter space’. Upon this space, a specific ‘parameterisation’ can be represented as a vector, while a sequence of parameterisations is figurable as a curve. We will term any complete parameterisation a ‘case’ of the schema: a place in parameter space as indicated by a parameterisation vector. It is equivalent to the box from our thought experiment after the wall temperatures are set but before it is filled with fluid.

It has two ‘state variables’: the temperatures of the insulating walls, which are the finest metrics we are permitted to access for defining the interior behaviour. We will term these two variables the ‘configurables’ of the system, and any given set of values as a ‘configuration’ of the system defining a particular ‘state’ that the system can achieve. Just as the parameterisation was conceptualised as a vector through parameter space, so may we regard the configuration as a vector through ‘state-space’. Before configuration, a given system is everywhere and nowhere in state-space. In the example of our box experiment, the apparatus is ‘configured’ by pouring fluid into it; we may do this carefully, and hence know what configuration we are selecting, or carelessly, in which case we have no idea where in state-space we are placing the system. (One important consequence of the way we have described this concept is that all cases share the same state-space; a configuration in one case is equally conceivable in any other, allowing models to ‘move’ in case-space as readily as they move between states.)

According to this vocabulary, our box experiment can be described as follows. First we take the concept of the experiment and assemble the appropriate capital, carefully noting several model options whose quantities we have implicitly or explicitly decided along the way, such as the conductivity of the wall plates. We then ‘place’ our apparatus at a particular point in parameter space by setting the temperatures of the two thermal walls, thus choosing a fixed model case to explore. We then fill the box with fluid, thus ‘configuring’ it - at this stage we are of course careful to note the time. At last, we step back from the apparatus and allow it to ‘iterate’, taking occasional measurements of the two state variables along the way. Eventually, some termination condition is reached, and the experiment is ceased. Being good scientists, we again note the time before breaking for lunch.

What has unfolded here? The model has in effect travelled from one configuration in state-space to another. Along the way, the model has traced out a curve of intermediary configurations through state-space - a curve whose form we are made partially aware of by way of our observations. Specifically, this curve is a parametric curve, i.e. a vector-valued function of time. We could imagine visualising it by plotting one insulating-wall temperature against the other, with the time since configuration represented by colour. Unlike any arbitrary curve which we could draw over state-space, this curve is very special: it is the unique, unbidden tendency of the model, arising solely from its own natural logic. Such a trajectory we will term a ‘traverse’.
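The procedure just described can be mimicked in a few lines of Python, which makes the vocabulary concrete. Everything model-specific here is an assumption: the relaxation dynamics, the rates, and the helper names `make_case` and `run_traverse` are invented for illustration, and explicit Euler stepping stands in for whatever the real apparatus does. What the sketch does show is the order of operations: fix the parameters (choose a case), choose a starting configuration, iterate while observing, and stop at a termination condition, leaving behind a traverse sampled as a time-parameterised curve.

```python
import numpy as np

def make_case(t_top, t_bottom):
    """Set the parameters (thermal-wall temperatures) and return the case's flow.

    Hypothetical toy dynamics, for illustration only: each insulating-wall
    temperature relaxes toward its own blend of the two thermal-wall
    temperatures, at its own rate.
    """
    targets = np.array([0.25 * t_top + 0.75 * t_bottom,
                        0.75 * t_top + 0.25 * t_bottom])
    rates = np.array([1.0, 0.5])

    def flow(c):
        # Velocity in state-space at configuration c.
        return rates * (targets - c)

    return flow

def run_traverse(flow, c0, dt=0.01, tol=1e-6, max_steps=100_000):
    """Iterate from configuration c0 until a steady-state condition is met.

    Returns the traverse as sampled times and configurations: a parametric
    curve c(t) through the two-dimensional state-space.
    """
    c = np.asarray(c0, dtype=float)
    times, states = [0.0], [c.copy()]
    for step in range(1, max_steps + 1):
        u = flow(c)
        if np.max(np.abs(u)) < tol:   # termination condition: steady state
            break
        c = c + dt * u                # explicit Euler step ('iterate')
        times.append(step * dt)       # 'observe' both state variables
        states.append(c.copy())
    return np.array(times), np.array(states)

# Place the apparatus in parameter space, configure it, and let it iterate.
case = make_case(t_top=0.0, t_bottom=1.0)
t, c = run_traverse(case, c0=[0.5, 0.5])
```

The returned arrays are exactly the partial view of the traverse that our occasional measurements provide: a finite sample of the curve, not the curve itself.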

What sort of knowledge do we hope to attain with such an exercise? If the model were taken to represent an interesting scenario in itself - for example, coolant flowing through an engine - then we have our answer: we wanted to know how such-and-such a thing would behave in such-and-such a situation, and now we know. But if our purpose is to understand the model itself, the outcome of any given experiment is not important. What we are really interested in is the traverse itself - and what the traverse tells us about the surface over which it extends.

We beg the indulgence of another thought experiment. This time, imagine we are on a causeway high above a dark and endless sea. We know that the surface of the sea is in motion, but we cannot perceive it directly. All we can say for sure is that the pattern of the currents is always the same. How can we ascertain what forces ultimately motivate the churning of the waters?

One option would be to follow the example of Winnie the Pooh [Milne, 1988] and pepper the surface with little twigs. As each twig travels with the current, tracing out a single streamline, we carefully plot its position (northings vs eastings, say). We may find that some twigs eventually come to rest over a downwelling, too buoyant to sink. Others may fall into ever-revolving loops, or conversely reveal pathways that seem to continue endlessly. Over time we gradually build a sense of the overall nature of the current that is ever improving, and ever insufficient. For what is certain is that eventually we must stop casting sticks: it will never be possible to completely sample the infinite sea, nor even any region of that sea, even with infinite time, nor even with infinite sticks.

Let the sea represent state-space. The twigs are individual instances of our box model, with the eastings and northings representing the values of the two state variables; the paths traced by the twigs are individual traverses of a particular case of the model. We see now that a given case of a model is in fact a function that maps coordinates in state-space to flow vectors: what we shall hereafter call the ‘case function’ \(C\):

\[ C(c) = u \]

where \(c\) stands for a ‘configurable’, or a variable of state, and \(u\) is the ‘velocity’ in state-space. For the dark sea, the configurables are the eastings and northings of every point on the surface of the waters, while \(u\) is the flow vector at that point; for the box convection model, these are instead the temperatures of the insulating walls and the future temperatures of those walls after time \(t\).
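Because the case function simply maps a configuration \(c\) to a velocity \(u\), it can be written as an ordinary Python function, and each twig becomes a numerical integration of \(u = C(c)\) from one starting point. The vector field below is an arbitrary, hypothetical stand-in for the unseen currents (a slowly converging rotation), and `drop_twig` is an illustrative helper using explicit Euler steps; the point is only that every twig samples \(C\) along a single traverse and says nothing about the configurations it never visits.

```python
import numpy as np

def C(c):
    """Hypothetical case function: configuration (easting, northing) -> velocity u.

    A rotating current with weak convergence toward the origin.
    """
    x, y = c
    return np.array([-y - 0.1 * x, x - 0.1 * y])

def drop_twig(c0, dt=0.01, n_steps=2000):
    """Follow one twig (one traverse) by explicit Euler integration of u = C(c)."""
    path = [np.asarray(c0, dtype=float)]
    for _ in range(n_steps):
        c = path[-1]
        path.append(c + dt * C(c))
    return np.array(path)

# Cast twenty twigs at random starting points on the surface.
rng = np.random.default_rng(0)
twigs = [drop_twig(rng.uniform(-1.0, 1.0, size=2)) for _ in range(20)]
```

Plotting each `path` gives the streamlines of the thought experiment; however many twigs are cast, the union of their paths remains a set of curves of measure zero in state-space.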

A written statement of \(C\) would be equivalent to a complete analytical solution for the convection problem set out by this particular case. Obtaining such a statement would obviate any need to ever model that particular case of that particular schema again. Of course, such a scientific triumph is rarely achievable even in that small subset of cases where it is strictly possible. Even a partial solution over a limited interval would be conjectural at best: not only have we taken finite samples, but each sample was taken over a finite time interval - we would require a space-filling curve of observations, something only possible at infinity.

A more tractable approach would be to draw a ‘watershed’ diagram, ‘colouring’, so to speak, each point in state-space according to the common limiting behaviours that models initialised at those points ultimately exhibit. For example, if it is observed that a particular minimum or loop of minima exists in state-space, one might characterise all instances culminating in that vicinity as belonging to a single ‘basin’ of the model. Somewhere in the unknown region between two basins must lie one or more ‘ridges’ and zero or more unobserved basins. By fitting curves through all such regions, one might produce a family of approximate, partial solutions to the case function: a contingent victory, but still a valid one. Better still would be to design a sampling strategy around such an analysis, so that the ridges (chains of bifurcations in state-space) are aggressively sought rather than merely inferred.
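A watershed diagram can be approximated by brute force: sample many starting configurations, run each to its limiting behaviour, and colour each starting point by the basin in which it ends. This sketch assumes a hypothetical double-well case function with two attracting basins, at \((-1, 0)\) and \((1, 0)\), separated by a ridge along \(x = 0\); the helper `limit` and the grid resolution are illustrative choices. In a real problem the ridge is unknown and would be estimated from where neighbouring labels change.

```python
import numpy as np

def C(c):
    """Hypothetical case function: gradient flow on the double-well potential
    V(x, y) = (x**2 - 1)**2 / 4 + y**2 / 2, with minima at (-1, 0) and (1, 0)."""
    x, y = c[..., 0], c[..., 1]
    return np.stack([-(x**3 - x), -y], axis=-1)

def limit(c0, dt=0.05, n_steps=2000):
    """Integrate many configurations at once; return their end points."""
    c = np.array(c0, dtype=float)
    for _ in range(n_steps):
        c = c + dt * C(c)
    return c

# Sample state-space on a regular grid of starting configurations.
xs = np.linspace(-2.0, 2.0, 41)
ys = np.linspace(-1.0, 1.0, 21)
X, Y = np.meshgrid(xs, ys)
starts = np.stack([X.ravel(), Y.ravel()], axis=-1)
ends = limit(starts)

# Label each start by the attractor its traverse reaches. Points lying exactly
# on the ridge (x = 0) never leave it and are labelled arbitrarily.
attractors = np.array([[-1.0, 0.0], [1.0, 0.0]])
dist = np.linalg.norm(ends[:, None, :] - attractors[None, :, :], axis=-1)
basin = np.argmin(dist, axis=1).reshape(X.shape)
```

The `basin` array is the watershed colouring; the boundary between its two labels is a sampled estimate of the ridge, accurate only to the grid spacing, which is why targeted sampling near label changes pays off.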

Let us return to the dark sea, where we now observe that there has been a change in the wind. We repeat our experiment and find that the motion of the water is quite different from before. We infer that the flow is in fact controlled by two forcings. The first is constant: the shape of the sea bed we cannot perceive. The second is variable: the direction and strength of the wind. We resolve to track the wind with two further variables (either the wind vector components or the wind’s trend and magnitude) and commit ourselves to what now emerges as the true scientific challenge: the charting of the obscured sea floor by proxy of the ocean currents.

The two new ‘wind’ variables are the system variables of the dark sea. In the example of our box convection model, they correspond to the temperatures of the upper and lower thermal walls, while the unseen ocean floor corresponds to the enigmatic nature of the fluid inside the box, which it is our ultimate purpose to discover. The ranges of the system variables, as we have discussed, define ‘case-space’, the set of all case functions \(C\). In this sense, the schema itself could now be thought of as a higher-order function, the ‘schema function’ \(S\), whose outputs are case functions:

\[ S(p) = C \]

where \(p\) stands for ‘parameter’, the name we have chosen for system variables.

Given the difficulties already discussed with respect to \(C\), we must expect that a complete written expression for \(S\) will be either infeasible or impossible to obtain for all but the most trivial schema. Nevertheless, we are committed to bettering our knowledge of \(S\) by some means, for to understand it is to understand the schema as a whole. What we require is some means of collapsing the dimensionality of the problem.

One option would be to apply some sort of reduction to \(S\):

\[ S_R(p) = R(S(p)) \]

where \(R\) is an operator that accepts the case functions \(C\) returned by \(S\) and itself returns some statement or metric of \(C\) which summarises its first-order features. A familiar example of such a reduction is the concept of ‘phase’ in the physical sciences. The phases of water (solid, liquid, gas, supercritical fluid, et cetera) are in effect statements regarding the form that water should be expected to take at steady state, given certain values of the two system variables, temperature and pressure: phase, in other words, is a reduction over the state-space of water. Extending this notion, a phase diagram can be thought of as a kind of reduced schema function, \(S_R\), which maps the degrees of freedom of \(S\) to the outputs of \(R\).
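The chain from schema to case to reduction maps naturally onto higher-order functions. In this illustrative sketch every case is the one-dimensional flow \(u = a + bc - c^3\), an assumption chosen because its behaviour is known analytically, so `S` takes the parameters \((a, b)\) and returns a case function. The reduction `R` counts attractors by locating where \(u\) changes sign from positive to negative across a sampled window of state-space (the window and sample count are arbitrary choices), and tabulating \(R(S(p))\) over a parameter grid yields a two-‘phase’ diagram (one attractor or two), loosely analogous to a phase diagram.

```python
import numpy as np

def S(p):
    """Hypothetical schema function: parameters p = (a, b) -> case function C.

    Every case is a one-dimensional flow u = C(c) = a + b*c - c**3.
    """
    a, b = p

    def C(c):
        return a + b * c - c**3

    return C

def R(C, window=(-3.0, 3.0), n=6001):
    """Reduction: count the stable equilibria (attractors) of C in a window.

    In one dimension an attractor is where u changes sign from + to -.
    """
    c = np.linspace(*window, n)
    u = C(c)
    return int(np.sum((u[:-1] > 0) & (u[1:] <= 0)))

def S_R(p):
    """Reduced schema function: S_R(p) = R(S(p))."""
    return R(S(p))

# A 'phase diagram': the reduction tabulated over a grid of parameters.
a_values = np.linspace(-1.0, 1.0, 21)
b_values = np.linspace(-1.0, 1.0, 21)
phase = np.array([[S_R((a, b)) for a in a_values] for b in b_values])
```

For this particular schema the boundary between the two phases is known to be \(4b^3 = 27a^2\) (the discriminant of the cubic), so the sampled diagram can be checked against it; for a real schema no such check exists, which is precisely the difficulty.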

A completely different approach to the same problem is represented by the Hertzsprung-Russell (HR) diagram, which organises stars according to their colour and brightness. This is equivalent to taking randomly selected states from randomly selected traverses of randomly selected cases of the schema and plotting them according to some consistent reduction over each state: in this example, the average surface temperature (colour) and the luminosity (brightness) of an arbitrary sample of observed stars. In effect, the HR plot is a kind of multi-case, discontinuously and stochastically sampled, shared state-space. The parameters of the HR schema are stellar mass, total internal energy, and relative elemental abundances, which, being a priori unknown, were not available as axes to organise the observations of stars. Instead, these parameters, along with the missing time dimension and many other symmetries, became apparent a posteriori as clusters in shared state-space. (Principal Component Analysis is an example of how such clustering can be detected algorithmically, and for arbitrarily many dimensions to boot.) The HR diagram has understandably become a cornerstone of astrophysics, as canonical in its field as phase diagrams are in chemistry.
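The parenthetical remark about Principal Component Analysis can be illustrated with NumPy alone. The data here are synthetic: states from two hypothetical cases that differ only along one hidden direction of a 50-dimensional state-space, buried in unit noise. PCA, computed here from the singular value decomposition of the mean-centred data, recovers that direction as its first component, and the sign of each state's score along it separates the two cases without the analysis ever being told which case produced which state.

```python
import numpy as np

rng = np.random.default_rng(42)
n_per_case, n_dims = 200, 50

# Synthetic 'shared state-space' samples: two cases whose states differ
# along one hidden direction, buried in isotropic noise.
hidden_direction = rng.normal(size=n_dims)
hidden_direction /= np.linalg.norm(hidden_direction)
case_a = rng.normal(size=(n_per_case, n_dims)) + 4.0 * hidden_direction
case_b = rng.normal(size=(n_per_case, n_dims)) - 4.0 * hidden_direction
states = np.vstack([case_a, case_b])
labels = np.repeat([0, 1], n_per_case)  # hidden from the analysis; used only to check it

# PCA via SVD of the mean-centred data matrix.
centred = states - states.mean(axis=0)
_, singular_values, vt = np.linalg.svd(centred, full_matrices=False)
pc1 = centred @ vt[0]                     # scores on the first component
explained = singular_values**2 / np.sum(singular_values**2)

# The sign of the first-component score recovers the hidden grouping
# (up to an arbitrary overall sign, hence the max below).
recovered = (pc1 > 0).astype(int)
agreement = max(np.mean(recovered == labels), np.mean(recovered != labels))
```

The same code runs unchanged on \(10^4\)-dimensional states; only the cost of the decomposition grows.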

A third method has recently become available with the advent of modern machine learning techniques. Text-retrieval algorithms of the kind used to rank web pages commonly work by representing each page as a ~100,000-dimensional vector in lexical space, where the vector components are relative word frequencies. Network diagrams of semantic relatedness are constructed by determining distances through the resulting hypercube. These networks can then be tagged with metadata, equivalent to system variables or ‘parameters’ in our treatment, and used to study the evolution of online knowledge systems. Image recognition algorithms work on a similar principle but with the addition of intermediary layers. The ‘configurables’ as such are the RGB values of each pixel, while the ‘parameters’ are the categories or ‘tags’ associated with each image (usually by a human), e.g. ‘dog’, ‘cat’, or ‘truck’. Each case implies infinitely many configurations, yet there is evidently some symmetry in state-space for each case that uniquely implies its parameters. To detect these symmetries, the state- and case-spaces are interleaved with one or more ‘latent spaces’ of much lower dimension than either, revealing latent variables that map similar cases to potentially wildly dissimilar configurations. One shortcoming of this approach is that it is difficult to peer into these hidden layers to learn exactly how the algorithm knows what it appears to know. Lately, however, it has even become possible to ‘traverse’ these latent spaces, or rather to traverse shared state-space according to trajectories in latent space [Mahendran and Vedaldi, 2015]. Such an approach can elucidate machine intuitions and potentially allow them to be captured in human-cognisable form.
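The lexical-space representation mentioned above is easy to demonstrate at toy scale. The sketch below uses three invented snippets of text rather than real web pages, builds relative word-frequency vectors over a shared vocabulary, and measures relatedness by cosine similarity, one common choice of distance in such spaces; the helper names are illustrative.

```python
from collections import Counter
import math

documents = {
    "mantle": "mantle convection drives plate tectonics and mantle plumes",
    "plates": "plate tectonics and subduction are driven by mantle convection",
    "pastry": "butter and flour make a flaky pastry",
}

def frequency_vector(text, vocabulary):
    """Relative word frequencies of `text` over a fixed vocabulary."""
    counts = Counter(text.split())
    total = sum(counts.values())
    return [counts[word] / total for word in vocabulary]

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors (1 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Each document becomes a point in a |vocabulary|-dimensional lexical space.
vocabulary = sorted({word for text in documents.values() for word in text.split()})
vectors = {name: frequency_vector(text, vocabulary) for name, text in documents.items()}

# Pairwise relatedness: the edge weights of a tiny semantic network.
similarity = {
    (a, b): cosine_similarity(vectors[a], vectors[b])
    for a in documents for b in documents if a < b
}
```

The two geodynamics snippets score far closer to each other than either does to the pastry snippet, even though they share no sentence structure: the relatedness lives entirely in the geometry of lexical space.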

Regardless of how it is achieved, the fundamental modus operandi of all these methods is the same: to uncover hidden symmetries that relate a multitude of superficially dissimilar effects to a small number of inferred causes. Minting an original vocabulary for this family of problems may help provoke novel collaborations between apparently disparate fields which share similar problems. We cannot hope to make much progress in isolation.

2.3.2. Suite-modelling for geodynamics: PlanetEngine and Everest

Having developed an expressive vocabulary for discussing suite-modelling problems, we now apply it to geodynamics. Our purpose is to constrain the nature of our problem, and determine what tools we require to successfully engage with it.

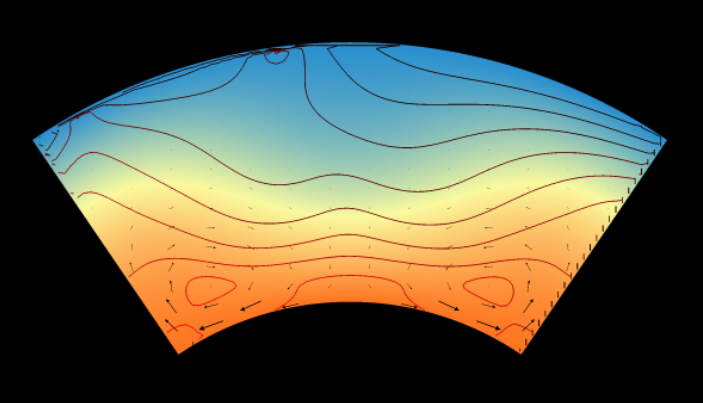

We begin with our intention, which is to ascertain the consequences of some rheological formulation through numerical modelling of mantle convection. This gives us our schema, which will be implemented through Underworld code. We have several options to consider: resolution, timestep size, tolerance. These we must carefully select according to the considerations discussed earlier. The parameters of our schema are manifold, and might include the Rayleigh number \(Ra\), the heating term \(H\), the degree of curvature \(f\), the model aspect ratio \(A\), the maximum viscosity contrast \(\eta_0\), the yielding coefficient \(\tau\), the thermal diffusivity \(\kappa\), the thermal expansivity \(\alpha\), the reference density \(\rho\), and more.

Let us estimate the total number of parameters to be in the order of \(10\); this compares to only two in the examples presented thus far. At the configuration level, we have some number of state variables, beginning with the temperature field and possibly including the distribution of material phases, the stress history, and others. In two dimensions, each field has in the order of \(N^2\) degrees of freedom, where \(N\) is the resolution; if our resolution is in the order of \(100\), each field alone contributes configurables in the order of \(10^4\), and several fields together may contribute \(10^5\) or more. Compare this to the mere two configurables in both the box convection and ‘dark sea’ thought experiments. Overall, the dimensions of the problem are clearly very much greater than before. Instead of two-dimensional planes describing the case- and state-spaces, these must now be represented by hypercubes of roughly 10 and 100,000 dimensions respectively.
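The orders of magnitude above follow from simple counting. Writing \(n_f\) for the number of state-variable fields (a symbol introduced here only for this estimate) and \(N \sim 100\) for the linear resolution:

```latex
\dim(\text{case-space}) \sim 10,
\qquad
\dim(\text{state-space}) \sim n_f \, N^2 = n_f \times 100^2 = n_f \times 10^4
```

A temperature field alone thus gives \(10^4\) configurables, and each additional field (material phase, stress history, and so on) adds at least as many again, which is how the state-space grows towards \(10^5\) dimensions.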

We are already aware of several methods with which to attack such a problem in post-analysis. What we have not discussed is the formidable theoretical, logistical, and organisational challenge of designing, commissioning, overseeing, and aggregating a suite-modelling campaign on this scale. Bespoke tools are called for; tools specific to geodynamics, as well as tools germane to suite-modelling in general. To answer these needs, we introduce PlanetEngine and Everest.

2.3.2.1. PlanetEngine

PlanetEngine is a Python-based wrapper for the geodynamics code Underworld which specialises in dimensionless large-scale convection problems in Cartesian and cylindrical geometries. Its primary purpose is to provide successively higher-order objects that implement in code the suite-modelling idiom described above. Accordingly, PlanetEngine’s top-level classes include:

The ‘System’ class, which non-invasively and transparently wraps native Underworld models to provide an easy-to-use higher-level framework for essential model-wrangling operations, including methods for visualisation, initialisation, iteration, observation, checkpointing, loading, and more. The user is invited to flag system inputs as options, parameters, or configurables, which are then separately ‘stamped’ as metadata on all model outputs; apart from this, users are only expected to define ‘update’ and ‘iterate’ functions to enable all higher-level behaviours of the System interface. PlanetEngine systems correspond semantically to the ‘system’ notion introduced in our earlier conceptual model - that is, a system is a particular instance of a particular case, initialised at a particular point in state-space, and equipped with the ability to integrate through time in accordance with the ‘flow’ of the case. PlanetEngine systems inherit from Everest ‘Iterables’ (see next section), whence they derive most of their useful properties.

A family of abstractly-defined ‘conditions’, which may be either unique (suitable for initialising a model, e.g. a sinusoidal initial condition) or non-unique (suitable for terminating one, e.g. a steady-state criterion). Conditions can be merged in various ways to create more complex conditions, for example overlaying noise over a conductive geotherm, or applying a horizontal perturbation after applying a vertical one. A special class of conditions allows model state variables to be initialised from the outputs of other models, even across different geometries. PlanetEngine conditions should be thought of as representing localities or regions in state-space, which may either be defined absolutely (i.e. with respect to the origin) or relatively (i.e. with respect to internal metrics, typically timestep or time index, of other systems). PlanetEngine conditions inherit from Everest ‘Booleans’ and hence are valid inputs for Python ‘if’ statements and other typical true-false operations.

An ‘Observer’ class that makes and stores observations about Systems as they iterate through time. Observers are initialised with an observee System and a Condition object that determines when observations should be taken, e.g. every tenth step, or whenever average temperature exceeds a certain threshold. While it is a functionality of Systems to store their own state variables in full at certain user-defined intervals (i.e. checkpointing), it is the role of Observers to gather various derived data, often of lower dimension than state-space, and typically at a much greater frequency than the checkpointing interval. PlanetEngine is designed to support extremely thorough runtime analyses while minimising any resulting impacts on iteration speed and memory usage. This is invaluable for very large model suites, where disk efficiency and sampling rate are priorities. PlanetEngine observers are Everest ‘Producers’ but not Iterables in their own right; their internal iteration count is incremented only by their associated system.

A ‘Traverse’ class which, when activated, builds a System from provided ingredients (schema, options, parameters, and configurables) and iterates it until a provided terminal condition is recognised. Observers may optionally be attached, which are ‘prompted’ to observe with every iteration (whether they do or not depends on their internal Condition). Traverses in PlanetEngine correspond directly to traverses in the conceptual model: they are journeys from a unique starting point to a non-unique end point according to the ineluctable logic of each case and schema. PlanetEngine traverses are instances of the Everest Task class, hence their basic functionality is to do some ‘indelible work’ (work which is written to disk), which here involves taking at least one initial and one terminal checkpoint. This allows other traverses (or tasks generally) to pick up where the previous traverse left off.

The ‘Campaign’ class: the capstone functionality of PlanetEngine. Campaigns are a kind of Everest Container type which, when iterated, continually produce, execute, and destroy potentially endless sequences of Traverses. Campaigns are configured to be operated by multiple unaffiliated processes, and even multiple separate devices, simultaneously. Hosted tasks are tracked using a ‘library card’ system which monitors which tasks are currently checked out by other processes, which have been returned complete, which incomplete, and which have been returned after having failed. Campaigns distinguish between failures due to systematic errors (e.g. unacceptable input parameters or misconfigured models) and failures due to extraneous circumstances (e.g. power outages); extensive use of context managers ensures resilience and minimises corruption, which can otherwise become critically problematic when suite populations grow beyond a user’s ability to individually curate them. Most importantly, Campaigns are designed to accept stopping and starting without complaint: resources may be attached and detached at will, or the campaign as a whole suspended for any length of time, without any logistical consequences; when the campaign is renewed, or new resources added, it is guaranteed to continue precisely from where it left off. Campaigns can be provided with static lists of jobs to loop through, but can also be given ‘smart’ assignments that react to model outcomes and change tack accordingly. For instance, a Campaign might be instructed to randomly sample case-space until a bifurcation is encountered, then spawn new jobs parallel to that bifurcation to explore its extent. Such adaptive strategies would allow computational effort to be concentrated on the ridges of case-space rather than spread evenly across it.
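The ‘library card’ bookkeeping can be illustrated with a small job ledger. The sketch below is hypothetical and is not the Everest or PlanetEngine implementation (which keeps its records in HDF5); it substitutes Python's built-in `sqlite3` module, whose `BEGIN IMMEDIATE` transactions make the check-out step atomic across processes sharing one database file. All function names are illustrative. Jobs returned as `'failed'` represent systematic errors and are never retried, while jobs interrupted by extraneous circumstances are handed back as `'incomplete'` and picked up again on restart.

```python
import sqlite3

def open_ledger(path):
    """Open (or create) a job ledger shared by any number of worker processes."""
    # isolation_level=None: we issue BEGIN/COMMIT ourselves.
    db = sqlite3.connect(path, isolation_level=None, timeout=30.0)
    db.execute(
        "CREATE TABLE IF NOT EXISTS jobs ("
        " id INTEGER PRIMARY KEY,"
        " params TEXT UNIQUE NOT NULL,"
        " status TEXT NOT NULL DEFAULT 'available',"
        " holder TEXT)"
    )
    return db

def add_jobs(db, param_strings):
    """Register jobs; re-adding an existing job is silently ignored."""
    db.executemany(
        "INSERT OR IGNORE INTO jobs (params) VALUES (?)",
        [(p,) for p in param_strings],
    )

def check_out(db, holder):
    """Atomically claim one available (or resumable) job; None if none remain."""
    db.execute("BEGIN IMMEDIATE")  # take the write lock before reading
    try:
        row = db.execute(
            "SELECT id, params FROM jobs"
            " WHERE status IN ('available', 'incomplete')"
            " ORDER BY id LIMIT 1"
        ).fetchone()
        if row is not None:
            db.execute(
                "UPDATE jobs SET status = 'checked_out', holder = ? WHERE id = ?",
                (holder, row[0]),
            )
    except Exception:
        db.execute("ROLLBACK")
        raise
    db.execute("COMMIT")
    return row

def check_in(db, job_id, status):
    """Return a job as 'complete', 'incomplete' (resumable) or 'failed'."""
    if status not in ("complete", "incomplete", "failed"):
        raise ValueError(f"unknown return status: {status!r}")
    db.execute(
        "UPDATE jobs SET status = ?, holder = NULL WHERE id = ?",
        (status, job_id),
    )

def release_stale(db, holder):
    """After a crash or power outage, hand a dead worker's jobs back as incomplete."""
    db.execute(
        "UPDATE jobs SET status = 'incomplete', holder = NULL"
        " WHERE status = 'checked_out' AND holder = ?",
        (holder,),
    )

# Usage: a single process working through a tiny campaign.
db = open_ledger(":memory:")
add_jobs(db, ["Ra=1e5", "Ra=1e6", "Ra=1e7"])
job_id, params = check_out(db, holder="worker-1")
check_in(db, job_id, "complete")
```

Because all state lives in the ledger rather than in any worker, stopping every process and starting new ones later simply resumes from the remaining `'available'` and `'incomplete'` rows.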

In addition to its headline features, PlanetEngine provides a wide range of extended functionalities for basic Underworld objects. Some are being considered for incorporation into the main Underworld branch; others are more specialised for the particular use case of PlanetEngine. Highlights include:

Specialised visualisation options within and beyond Underworld’s built-in gLucifer interface. In particular, PlanetEngine provides a class that produces cheap, light, and consistent raster images and animations for any given Underworld data object at any given degree of detail, which is ideal for machine learning applications. PlanetEngine also offers a `quickShow` feature that aggressively searches for an appropriate visualisation strategy for any given input or inputs, automatically constructing projections and dummy variables as required, allowing for very rapid generation of figures. All PlanetEngine visualisations are wrapped in the ‘Fig’ class, which handles disk operations, filenames, and other housekeeping matters.

A module that handles mappings between coordinate systems, especially between different annular domains, using a ‘box’ abstraction that normalises coordinates to a unit height/width/length volume, allowing, for instance, the evaluation of Underworld functions or data objects between multiple different meshes. This powers a workhorse `copyField` function that warps data between meshes to within a given tolerance; `copyField` can also be configured to tile, fade, or mirror spatially referenced data, allowing, for instance, a steady-state condition derived cheaply on a small-aspect coarse mesh to be tiled over a higher-aspect fine mesh.

An extensive toolbox of new Underworld ‘function’-type classes with a number of useful features, including lazy evaluation, optional lazy initialisation, optional incremental computation, optional memoisation, automated projection, automatic labelling, hash identification, and extended operator overloading. The new functions include dynamic one-dimensional variables (e.g. minima/maxima, integrals, averages), region-limited operations, arbitrarily nested derivatives, stream functions, quantiles, vector operations, masks, normalisation functions, conditionals, filters, clips, and much more. Most of the new functions are built from standard Underworld functions and data types and are consequently robust and C-level efficient; all in turn inherit from the built-in Underworld function class and so may be used anywhere the native inventory is used, although their design is particularly oriented towards and optimised for run-time analysis. All have been tested for parallel safety and freedom from memory leaks.

A series of analysis classes providing single-line invocation for common analytical operations, including Nusselt number, velocity root-mean-square, and vertical stress profile. Like PlanetEngine function objects, analysis objects are hashed and logged at initialisation to avoid needless duplication; they also store their observations locally and are configured to avoid redundant invocation. They export the Underworld function interface and so are safe to use inside Underworld systems: for example, one could define the yield stress in terms of some time-averaged function of the stress history by simply incorporating the appropriate PlanetEngine analyser.


A system for attaching boundary and range assumptions directly to Underworld data objects, enabling automatic clipping and normalisation of hosted data whenever changes are detected.
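The combination of lazy evaluation, memoisation, hash identification and operator overloading described for the function toolbox can be illustrated with a minimal, self-contained sketch. It does not use Underworld at all: `LazyFunction`, `Variable` and `minimum` are hypothetical names, NumPy arrays stand in for mesh variables, and the hashing scheme (SHA-1 of an expression's label and its inputs' hashes) is an assumption rather than PlanetEngine's actual implementation. Arithmetic on these objects only builds an expression tree; values are computed on request and recomputed only when an upstream variable has changed.

```python
import hashlib

import numpy as np


class LazyFunction:
    """Hypothetical stand-in for a PlanetEngine-style function object (not its real API)."""

    def __init__(self, compute, label, *inputs):
        self._compute = compute      # callable mapping input values to an output value
        self.label = label           # automatic labelling: a readable expression string
        self.inputs = inputs         # upstream LazyFunction objects
        # Hash identity built from the label and the inputs' hashes, so structurally
        # identical expressions receive identical hashes (assumed scheme).
        text = label + "|" + ",".join(f.hash for f in inputs)
        self.hash = hashlib.sha1(text.encode("utf-8")).hexdigest()[:12]
        self._cache_key = None       # input state that the memoised value corresponds to
        self._cache = None           # memoised value
        self.evaluations = 0         # how many times the value was actually recomputed

    def state(self):
        """Token summarising the current state of every upstream input."""
        return tuple(f.state() for f in self.inputs)

    def evaluate(self):
        key = self.state()
        if key != self._cache_key:   # lazy and memoised: recompute only if inputs changed
            self._cache = self._compute(*(f.evaluate() for f in self.inputs))
            self._cache_key = key
            self.evaluations += 1
        return self._cache

    # Operator overloading builds new lazy nodes; nothing is computed here.
    def __add__(self, other):
        return LazyFunction(np.add, f"({self.label} + {other.label})", self, other)

    def __mul__(self, other):
        return LazyFunction(np.multiply, f"({self.label} * {other.label})", self, other)


def minimum(f):
    """A scalar 'dynamic variable': the minimum of another function's values."""
    return LazyFunction(np.min, f"min({f.label})", f)


class Variable(LazyFunction):
    """Leaf node holding mutable data; its state changes each time the data is set."""

    def __init__(self, label, data):
        super().__init__(None, label)
        self._version = 0
        self.set(data)

    def set(self, data):
        self.data = np.asarray(data, dtype=float)
        self._version += 1

    def state(self):
        return (id(self), self._version)

    def evaluate(self):
        return self.data


T = Variable("T", [1.0, 2.0, 3.0])
expr = T * T + T                 # builds the expression; nothing is evaluated yet
print(expr.label)                # ((T * T) + T)
print(expr.evaluate().tolist())  # [2.0, 6.0, 12.0]
```

Calling `expr.evaluate()` a second time returns the cached array without recomputation; only after `T.set(...)` does the state token change and trigger a fresh evaluation.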

Together, these features constitute a meaningful advance in the state of the art, not only for Underworld but for computational geodynamics generally.
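The merging of terminating ‘conditions’ listed above can be sketched in a few lines of plain Python. Everything here is hypothetical: `Condition`, `steady_state`, `max_steps`, `ToyModel` and `run` are illustrative names, and the steady-state test (the last few relative changes of a scalar diagnostic all below a tolerance) is one plausible criterion, not necessarily PlanetEngine's. Combining criteria with `|` and `&` yields new conditions that can stand anywhere a single one could.

```python
from dataclasses import dataclass, field
from typing import Callable, List


class Condition:
    """Hypothetical sketch of a composable, non-unique (terminating) condition."""

    def __init__(self, test: Callable[[object], bool], name: str):
        self.test = test
        self.name = name

    def __call__(self, model) -> bool:
        return bool(self.test(model))

    def __and__(self, other: "Condition") -> "Condition":
        return Condition(lambda m: self(m) and other(m), f"({self.name} & {other.name})")

    def __or__(self, other: "Condition") -> "Condition":
        return Condition(lambda m: self(m) or other(m), f"({self.name} | {other.name})")


def steady_state(tolerance: float, window: int = 3) -> Condition:
    """Met once the last `window` relative changes of the diagnostic are below `tolerance`."""

    def test(model) -> bool:
        recent = model.history[-(window + 1):]
        if len(recent) < window + 1:
            return False
        # Relative change between consecutive steps (assumes a non-zero diagnostic).
        changes = [abs(b - a) / abs(b) for a, b in zip(recent, recent[1:])]
        return max(changes) < tolerance

    return Condition(test, f"steady({tolerance})")


def max_steps(n: int) -> Condition:
    """Met once the model has taken at least `n` steps."""
    return Condition(lambda m: m.step >= n, f"steps>={n}")


@dataclass
class ToyModel:
    """Toy model whose diagnostic relaxes geometrically towards 10."""

    value: float = 0.0
    step: int = 0
    history: List[float] = field(default_factory=list)

    def iterate(self) -> None:
        self.value += 0.5 * (10.0 - self.value)
        self.step += 1
        self.history.append(self.value)


def run(model: ToyModel, stop: Condition) -> ToyModel:
    """Iterate the model until the stopping condition is met."""
    while not stop(model):
        model.iterate()
    return model


stop = steady_state(1e-3) | max_steps(1000)
print(stop.name)                   # (steady(0.001) | steps>=1000)
print(run(ToyModel(), stop).step)  # 12
```

For this toy model the relative change at step k is 2^-k/(1 - 2^-k), which first stays below 10^-3 for three consecutive steps at steps 10 to 12, hence the stop at step 12.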

2.3.2.2. Everest

Everest is a free, open-source Python package developed as part of this thesis for the express purpose of organising and analysing massive suite-modelling campaigns. Fundamentally, it comprises two systems:

A library of Python classes which may be wrapped around user code to provide a high-level standardised interface for common suite-modelling operations.

A file format built on HDF5 which provides on-disk counterparts for objects inheriting from Everest classes and supports simultaneous, parallel access by any number of processes.

The core Everest class is the so-called ‘Built’. The general principle of the Built is to provide a framework that allows a complete working copy of the environment in which some data was produced to be recreated, live, exactly as it originally was, using only some attached metadata. Modules which define a class that inherits from Built can be ‘anchored’ to an HDF5 file on disk, referred to as a ‘frame’; the class can then be loaded directly from the frame even if the original module is no longer present. Each Built is assigned a unique hashed identity based on the complete code of the module defining it, which in turn provides the name of the HDF5 ‘group’ under which the object is filed in the archive; for human convenience, a simple ‘word hash’ facility generates a memorable, English-pronounceable name from each hash. If an instantiated Built is anchored, the parameters that initialised the instance are also saved and hashed. Supported parameter types include all the standard Python and NumPy data types and containers, all objects that support pickling (user-defined or otherwise), and any class or class instance that inherits from Built. Because of the hashing, even the slightest difference between two classes or instances is recorded: a new object never overwrites a previously anchored one, and an object identical to one already anchored coordinates with it rather than duplicating it, so large projects can never be corrupted by the introduction of small errors. Meanwhile, the ample metadata stored with each Built ensures complete reproducibility within the scope of the environment that Everest is made aware of.
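The identity scheme described above can be sketched with the standard library alone. This is an assumption-laden illustration, not Everest's implementation: the choice of SHA-256, the pickling of key-sorted parameters, and the consonant-vowel ‘word hash’ alphabet are all hypothetical, as are the function names. It shows the two properties the text relies on: identical classes and parameter sets always map to the same identity, while any change, however slight, produces a new one.

```python
import hashlib
import pickle

CONSONANTS = "bdfghjklmnprstvz"  # 16 consonants, chosen by a byte's high four bits
VOWELS = "aeiou"                  # 5 vowels, chosen by the byte modulo 5


def hex_hash(data: bytes) -> str:
    """Full-strength identity: a SHA-256 hex digest."""
    return hashlib.sha256(data).hexdigest()


def word_hash(hexdigest: str, syllables: int = 3) -> str:
    """Pronounceable nickname: one consonant-vowel syllable per leading byte of the digest.

    Much shorter than the digest, so collisions are possible; it is a label for
    humans, while the full digest remains the identity.
    """
    raw = bytes.fromhex(hexdigest)[:syllables]
    return "".join(CONSONANTS[b >> 4] + VOWELS[b % len(VOWELS)] for b in raw)


def class_identity(module_source: str) -> str:
    """Identity of a class: the hash of the complete source of its defining module."""
    return hex_hash(module_source.encode("utf-8"))


def instance_identity(class_id: str, params: dict) -> str:
    """Identity of an instance: its class identity plus its pickled, key-sorted parameters."""
    payload = class_id.encode("ascii") + pickle.dumps(sorted(params.items()), protocol=4)
    return hex_hash(payload)


cid = class_identity("class Convection:\n    pass\n")  # stands in for a whole module
a = instance_identity(cid, {"Ra": 1e7, "f": 0.5})
b = instance_identity(cid, {"f": 0.5, "Ra": 1e7})      # same parameters, other order
print(a == b, word_hash(a))
```

Pickled bytes are not guaranteed to be identical across Python versions, so a production system would need to pin its serialisation; the sketch ignores this.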
The use of hash identities means that all Builts can be stored equally as top-level objects in the host frame without any negative organisational consequences. This is desirable because a hierarchy well suited to one scientific query may be entirely counterproductive for even a slightly different one. A flat structure allows the most efficient organisation for each use case to be mapped onto the database case by case, without needlessly, and perhaps irreversibly, bending the database towards a single purpose.
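Because every record sits at the top level under its hash, a hierarchy can be built on demand for each query and discarded afterwards. The sketch below assumes a plain dictionary mapping hypothetical hash identities to their parameters, in place of an HDF5 frame; `arrange` is an illustrative helper, not part of Everest.

```python
from collections import defaultdict


def arrange(flat: dict, *keys: str):
    """Build a disposable nested view of a flat {hash: parameters} store.

    The store itself is never restructured; each query gets its own hierarchy.
    Records lacking a key are grouped under None.
    """
    if not keys:
        return sorted(flat)  # leaf level: the hash identities in this branch
    first, rest = keys[0], keys[1:]
    groups = defaultdict(dict)
    for identity, params in flat.items():
        groups[params.get(first)][identity] = params
    # Recurse into each group with the remaining keys.
    return {value: arrange(members, *rest) for value, members in groups.items()}


flat = {
    "aa": {"Ra": 1e6, "f": 0.5},
    "bb": {"Ra": 1e7, "f": 0.5},
    "cc": {"Ra": 1e7, "f": 0.9},
}
print(arrange(flat, "Ra"))       # one hierarchy for a Rayleigh-number query
print(arrange(flat, "f", "Ra"))  # a different one for a curvature-first query
```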

Various subclasses of Built are provided which add further functionality. The Producer class adds support for producing and storing arbitrary data: outputs can be held in memory, saved to the frame, or automatically saved at intervals determined by memory usage, clock time, or other factors; data can be sorted either in the order it is saved or by some provided index, in which case inadvertent duplication of entries is detected and prevented. The Iterator class, a kind of Cycler (that is, a callable Built), is designed to be inherited by intrinsically iterable models, such as the mantle convection models discussed here, which iterate through discrete time steps; it provides for saving and loading states, ‘bouncing’ between states, iterating between step counts, and many other useful features. (An anchored Iterator is always aware of which states have previously been saved to the frame, even by completely disconnected processes, so that multiple devices can work on the same model without clashing.) The State class provides for the definition of abstract conditions which can be evaluated with respect to other Builts; States can then be used to trigger Enactors, Conditions, Inquirers, and other objects, supporting progressively higher levels of coordination. The highest level of abstraction currently provided by Everest is the Task class, which repeatedly cycles a given Cycler until a stipulated Condition is met; because a Task is itself both a Cycler and a Condition, it can serve as either input to another Task, allowing arbitrarily complex trees of tasks to be constructed. Tasks can also instantiate themselves as subprocesses, so that one process can serve as a master for many others: any errors are logged and returned to the master task, where they are handled according to the user’s requirements.
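The Cycler/Condition duality that lets Tasks nest can be shown with duck typing. In this hypothetical sketch a cycler is anything with a `cycle()` method and a condition is anything with a `met()` method; `Model`, `Reaches`, `AllOf`, `InTurn` and `Task` are illustrative names rather than Everest's classes, and storage, logging and subprocess handling are omitted. The campaign-level Task uses the per-model Tasks both as its cycler (through `InTurn`) and as its condition (through `AllOf`).

```python
class Model:
    """Toy iterable model standing in for a time-stepping convection model."""

    def __init__(self, rate: float):
        self.rate, self.step, self.value = rate, 0, 0.0

    def cycle(self) -> None:
        self.step += 1
        self.value += self.rate


class Reaches:
    """Condition: the model's value has reached a target."""

    def __init__(self, model: Model, target: float):
        self.model, self.target = model, target

    def met(self) -> bool:
        return self.model.value >= self.target


class AllOf:
    """Condition: every sub-condition is met."""

    def __init__(self, conditions):
        self.conditions = list(conditions)

    def met(self) -> bool:
        return all(c.met() for c in self.conditions)


class InTurn:
    """Cycler: run each unfinished task in turn."""

    def __init__(self, tasks):
        self.tasks = list(tasks)

    def cycle(self) -> None:
        for task in self.tasks:
            if not task.met():
                task.cycle()


class Task:
    """Cycles `cycler` until `condition` is met; is itself a cycler and a condition."""

    def __init__(self, cycler, condition):
        self.cycler, self.condition = cycler, condition

    def cycle(self) -> None:  # Cycler interface
        while not self.condition.met():
            self.cycler.cycle()

    def met(self) -> bool:    # Condition interface
        return self.condition.met()


models = [Model(r) for r in (1.0, 2.0, 5.0)]
tasks = [Task(m, Reaches(m, 10.0)) for m in models]
campaign = Task(InTurn(tasks), AllOf(tasks))  # a Task built from Tasks
campaign.cycle()
print([m.step for m in models])  # [10, 5, 2]
```

As written, a Task whose cycler can never satisfy its condition loops forever; a practical version would add a step limit or timeout.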
Carrying out a task in a subprocess also ensures that the slate is wiped clean for any subsequent task. This is particularly important for memory management in large model suites: when a subprocess exits, the operating system reclaims all of its memory, which is far more thorough than the garbage collection provided at the Python or Cython level.
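A master process that runs each task in a fresh interpreter, collecting errors instead of crashing, might look like the following sketch. It uses only the standard `subprocess` module; `run_isolated` and `master`, the representation of each task as a string of Python source, and the choice to report the last line of the child's traceback as the error are all assumptions for illustration.

```python
import subprocess
import sys


def run_isolated(code: str, timeout: float = 60.0) -> dict:
    """Run `code` in a fresh Python interpreter and report the outcome to the master.

    All memory used by the child is returned to the operating system when it
    exits, so nothing leaks into the next task.
    """
    proc = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,
    )
    lines = proc.stderr.strip().splitlines()
    error = None
    if proc.returncode != 0:
        # The last traceback line names the exception, e.g. "ValueError: ...".
        error = lines[-1] if lines else f"exit code {proc.returncode}"
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "error": error}


def master(jobs, on_error=print):
    """Run each job in its own subprocess; failures are reported, not fatal."""
    results = []
    for job in jobs:
        outcome = run_isolated(job)
        if not outcome["ok"]:
            on_error(outcome["error"])
        results.append(outcome)
    return results


results = master(["print(sum(range(10)))", "raise ValueError('bad parameter')"])
```

Here the second job's failure is printed by the default `on_error` handler while the first job's output is still collected; a user-supplied handler could instead log, retry, or abort.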

Everest also provides bespoke Writer and Reader classes for the HDF5-based ‘frame’ to which Builts may optionally be anchored. The Writer automatically decides what sort of HDF5 object to create, and where, based on intuitive Python-level syntax: dictionaries are mapped to groups, for instance, while NumPy arrays are mapped to datasets or attributes as appropriate. The Writer also manages access to the frame using a lock file and a prime-number-based ‘window of access’ protocol, so that simultaneous write operations never occur, regardless of how many processes or devices attempt access. The Reader, conversely, supports simultaneous access by multiple processes through HDF5’s single-writer/multiple-reader (SWMR) mode. The Reader supports Python’s indexing and slicing syntax and is designed to provide fluid access to archived data no matter how large the frame becomes. It comes with its own search algorithm with wildcard support, allowing quick and easy filtering by data type, Built type, hash identity, value, or any other data; the search is recursive to a prescribed depth and handles the loops that HDF5 links can create. It operates in two modes: ‘soft’, which returns summaries of query results, and ‘hard’, which returns all results and loads any pickled objects, Built classes, and Built instances they include. This latter feature makes it quick and easy to load, for example, one particular model out of thousands by referencing merely one or two of its input parameters, some faintly recalled property of its output data, and the hash ID of the associated type. Algorithmic access is of course also supported: iterating through models to produce a figure, for instance, or to conduct some test whose outcome could then be tagged to each model as metadata.
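The recursive, depth-limited wildcard search with loop protection can be sketched over nested dictionaries, which here stand in for HDF5 groups (a dictionary containing a reference back to an ancestor plays the role of a looping link). `search` is a hypothetical helper, not the Reader's actual method; it uses the standard-library `fnmatch` module for wildcards and reduces the ‘soft’ mode to reporting type names, a simplification of the summaries described above.

```python
from fnmatch import fnmatchcase


def search(node: dict, pattern: str, max_depth: int = 3, hard: bool = False,
           path: str = "", _seen=None):
    """Yield (path, result) for every key matching the wildcard `pattern`.

    The search descends at most `max_depth` levels below `node`. A dict reached
    a second time (for example through a link back to an ancestor) is skipped.
    Soft mode yields a summary (the type name); hard mode yields the value itself.
    """
    if _seen is None:
        _seen = set()
    if max_depth < 0 or id(node) in _seen:
        return
    _seen.add(id(node))
    for key, value in node.items():
        child_path = f"{path}/{key}"
        if fnmatchcase(key, pattern):
            yield child_path, (value if hard else type(value).__name__)
        if isinstance(value, dict):
            yield from search(value, pattern, max_depth - 1, hard, child_path, _seen)


frame = {
    "aa": {"inputs": {"Ra": 1e7}, "outputs": {"Nu": [1.0, 2.0]}},
    "bb": {"inputs": {"Ra": 1e6}, "outputs": {"Nu": [3.0]}},
}
frame["bb"]["link"] = frame  # a loop back to the root
print(dict(search(frame, "R*")))              # soft: paths and type names
print(dict(search(frame, "Nu", hard=True)))   # hard: paths and full values
```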

Finally, Everest also defines a ‘Scope’ class, which is in effect an abstract statement about the range of Builts, and the range of indices within the outputs of each Built, for which some Boolean statement evaluates true. Scopes are produced by indexing Readers with ‘Fetch’ requests, somewhat like SQL queries; the resultant Scope is instantiated with the metadata of the request that produced it, ensuring that all data travels with its full and proper context. Once instantiated, however, a Scope can travel independently of the Reader, or indeed of the frame over which it was defined: this is possible because Everest Builts of equivalent type and initialisation profile share the same unique hash identity. Once the desired Scope has been acquired, it can be used to ‘slice’ into a frame (again through the Reader interface) to pull the indicated data in full. The Scope protocol allows easy and rapid aggregation of very large datasets across arbitrarily broad model suites. In tandem with standard out-of-core analysis packages such as Dask, Scopes are intended to provide a pain-free solution for wrangling model data up to the terabyte scale.
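A Scope can be modelled as a mapping from hash identities to sets of output indices, together with the text of the request that produced it. In this hypothetical sketch, `Scope`, `fetch` and `take` are illustrative names, a frame is a plain dictionary of per-Built output lists rather than an HDF5 file, and the set-style `&` and `|` combinators are an assumption about how scopes might be combined. Because a Scope holds only hashes and indices, it can be applied to any frame whose Builts share those hashes.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet


@dataclass(frozen=True)
class Scope:
    """Which output indices of which Builts satisfy a request.

    `members` maps a Built's hash identity to the selected output indices;
    `request` records the query that produced the scope.
    """

    members: Dict[str, FrozenSet[int]]
    request: str

    def __and__(self, other: "Scope") -> "Scope":
        shared = self.members.keys() & other.members.keys()
        members = {k: self.members[k] & other.members[k] for k in shared}
        return Scope({k: v for k, v in members.items() if v},
                     f"({self.request}) & ({other.request})")

    def __or__(self, other: "Scope") -> "Scope":
        empty = frozenset()
        members = {k: self.members.get(k, empty) | other.members.get(k, empty)
                   for k in self.members.keys() | other.members.keys()}
        return Scope(members, f"({self.request}) | ({other.request})")


def fetch(frame: dict, key: str, predicate: Callable[[float], bool], request: str) -> Scope:
    """Evaluate `predicate` on every stored value of `key` and record where it holds."""
    members = {}
    for identity, outputs in frame.items():
        hits = frozenset(i for i, v in enumerate(outputs.get(key, ())) if predicate(v))
        if hits:
            members[identity] = hits
    return Scope(members, request)


def take(frame: dict, scope: Scope, key: str) -> dict:
    """'Slice' a frame with a scope, pulling the selected values of `key` in full."""
    return {identity: [frame[identity][key][i] for i in sorted(indices)]
            for identity, indices in scope.members.items() if identity in frame}


frame = {"aa": {"Nu": [1.0, 5.0, 9.0], "t": [0.0, 0.1, 0.2]},
         "bb": {"Nu": [2.0, 8.0], "t": [0.0, 0.1]}}
hot = fetch(frame, "Nu", lambda v: v > 4, "Nu > 4")
late = fetch(frame, "t", lambda v: v >= 0.2, "t >= 0.2")
print(take(frame, hot & late, "Nu"))  # {'aa': [9.0]}
```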